The Sonification of… Facial Expressions

What happens when… we sonify the facial expressions of performers or audience members using real-time, machine-learning-based face tracking?

This interactive single-channel video piece explores what happens when… the movements and facial expressions of a scary clown trigger sounds (music, sound effects, and voice-overs) that complement and accentuate the performance in what appears to be a fixed, non-interactive video. A viewer wearing headphones is in fact an active participant: the same tracking technology monitors their head movements and facial expressions, triggering sounds and controlling how the video responds to them.

Facial Expressions as Musical Instruments

A camera captures the individual in front of it, and machine-learning software tracks the movements of their head and facial features. This data is analyzed in real time to extract characteristics, such as whether the eyes are closed or the mouth is open, that drive the audio. In the accompanying video, when the character closes their eyes, sounds associated with escapism play (e.g., children playing in a playground, chimes). When they open their mouth, a darker, scarier sound is emitted. The video will be displayed on a monitor.
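The proposal does not name the tracking software or say how characteristics are extracted. The following is a minimal sketch that assumes the tracker reports 2D landmark points for each video frame. Under that assumption, the eye and mouth mappings above can be expressed as simple geometric ratios that feed threshold triggers. The eye aspect ratio is a commonly used blink measure. The landmark ordering, threshold values, and the names `FaceSonifier`, `EdgeTrigger`, `"escapism"` and `"dark"` are all hypothetical, and real values would need tuning for the actual performer and camera.

```python
import math
from dataclasses import dataclass

# A 2D landmark coordinate (x, y) in image space, as a face tracker might report it.
Point = tuple[float, float]


def dist(a: Point, b: Point) -> float:
    """Euclidean distance between two landmarks."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(eye: list[Point]) -> float:
    """Eye openness from six landmarks ordered p1..p6 (assumed ordering):
    p1/p4 are the eye corners, p2/p3 the upper lid, p6/p5 the lower lid.
    The value drops toward 0 as the eye closes."""
    p1, p2, p3, p4, p5, p6 = eye
    return (dist(p2, p6) + dist(p3, p5)) / (2.0 * dist(p1, p4))


def mouth_open_ratio(top: Point, bottom: Point, left: Point, right: Point) -> float:
    """Vertical lip gap divided by mouth width; grows as the mouth opens."""
    return dist(top, bottom) / dist(left, right)


@dataclass
class EdgeTrigger:
    """Fires once when a feature crosses `on`, and re-arms only after it
    crosses back past `off` (hysteresis), so one gesture gives one cue."""
    on: float
    off: float
    rising: bool = True   # True: fire above `on`; False: fire below `on`
    active: bool = False

    def update(self, value: float) -> bool:
        crossed_on = value > self.on if self.rising else value < self.on
        crossed_off = value < self.off if self.rising else value > self.off
        if not self.active and crossed_on:
            self.active = True
            return True
        if self.active and crossed_off:
            self.active = False
        return False


class FaceSonifier:
    """Hypothetical mapping: eyes closing -> 'escapism' cue,
    mouth opening -> 'dark' cue. Thresholds are illustrative only."""

    def __init__(self) -> None:
        self.eyes_closed = EdgeTrigger(on=0.15, off=0.22, rising=False)
        self.mouth_open = EdgeTrigger(on=0.5, off=0.3, rising=True)

    def cues(self, ear: float, mor: float) -> list[str]:
        """Return the sound cues to start for one frame of tracking data."""
        triggered = []
        if self.eyes_closed.update(ear):
            triggered.append("escapism")  # e.g. playground voices, chimes
        if self.mouth_open.update(mor):
            triggered.append("dark")      # darker, scarier sound
        return triggered


if __name__ == "__main__":
    s = FaceSonifier()
    # (eye aspect ratio, mouth-open ratio) per frame:
    # eyes close, stay closed, reopen as the mouth opens, mouth stays open
    for ear, mor in [(0.30, 0.1), (0.10, 0.1), (0.12, 0.1), (0.30, 0.6), (0.30, 0.7)]:
        print(s.cues(ear, mor))
```

Running it prints `[]`, `['escapism']`, `[]`, `['dark']`, `[]`. Each gesture fires its cue once rather than on every frame the gesture is held, which is why the `on` and `off` thresholds are separated by a gap. The same per-frame features could also, in principle, drive the viewer-facing audio layer.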

A camera hidden above the monitor points at the viewer, who, without realizing it at first, is an active participant. The same tracking technology generates a second layer of audio and controls the non-linear playback of the video.

The performance duration is flexible; it is estimated to last about five minutes.

Collaborators

Collaborators include Alanna Herrera (ABQ); Erica Frank (Santa Fe), costume design (examples of Frank’s work are included here); and Drew Trujillo (ABQ), technology and sound art.

The Sonification of Facial Expressions

(Proof-of-Concept Video)

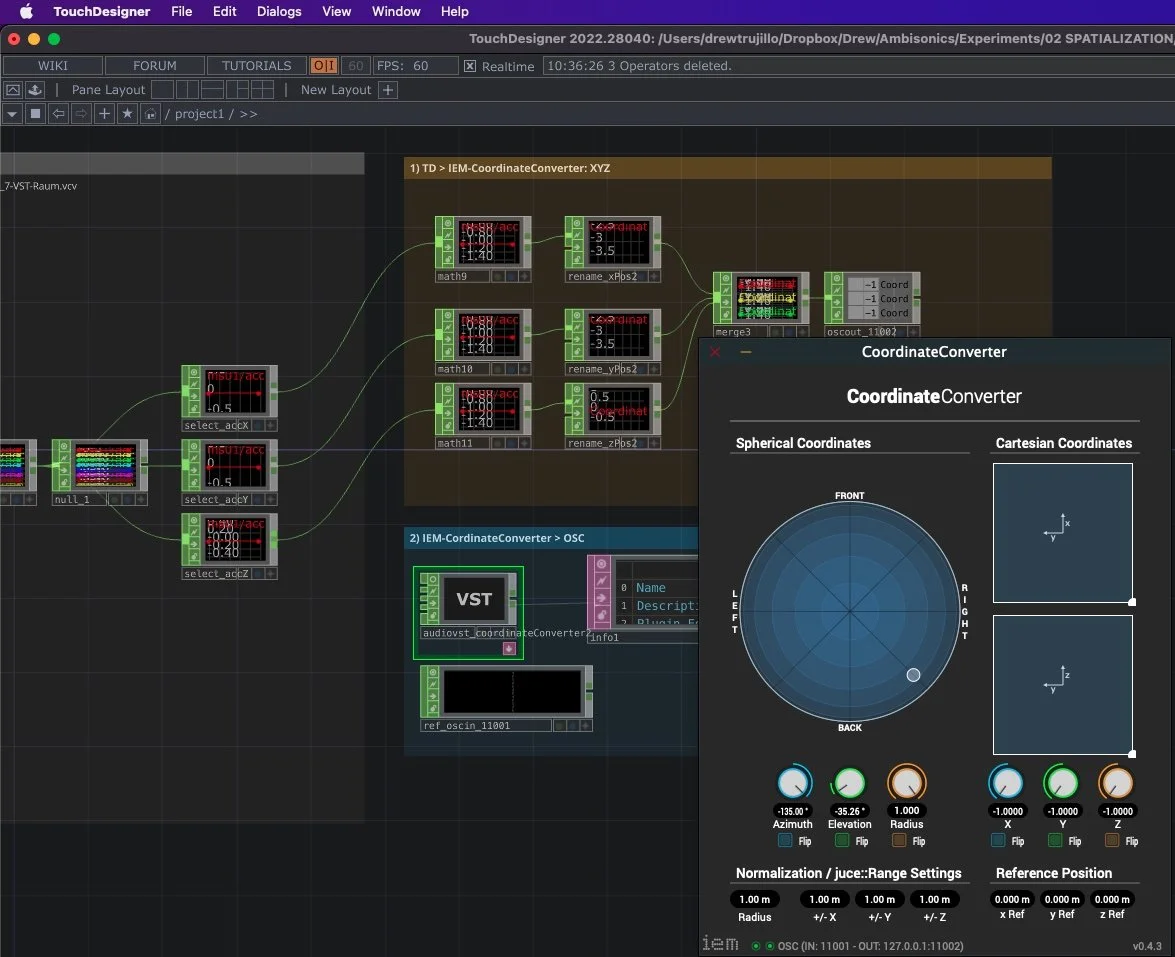

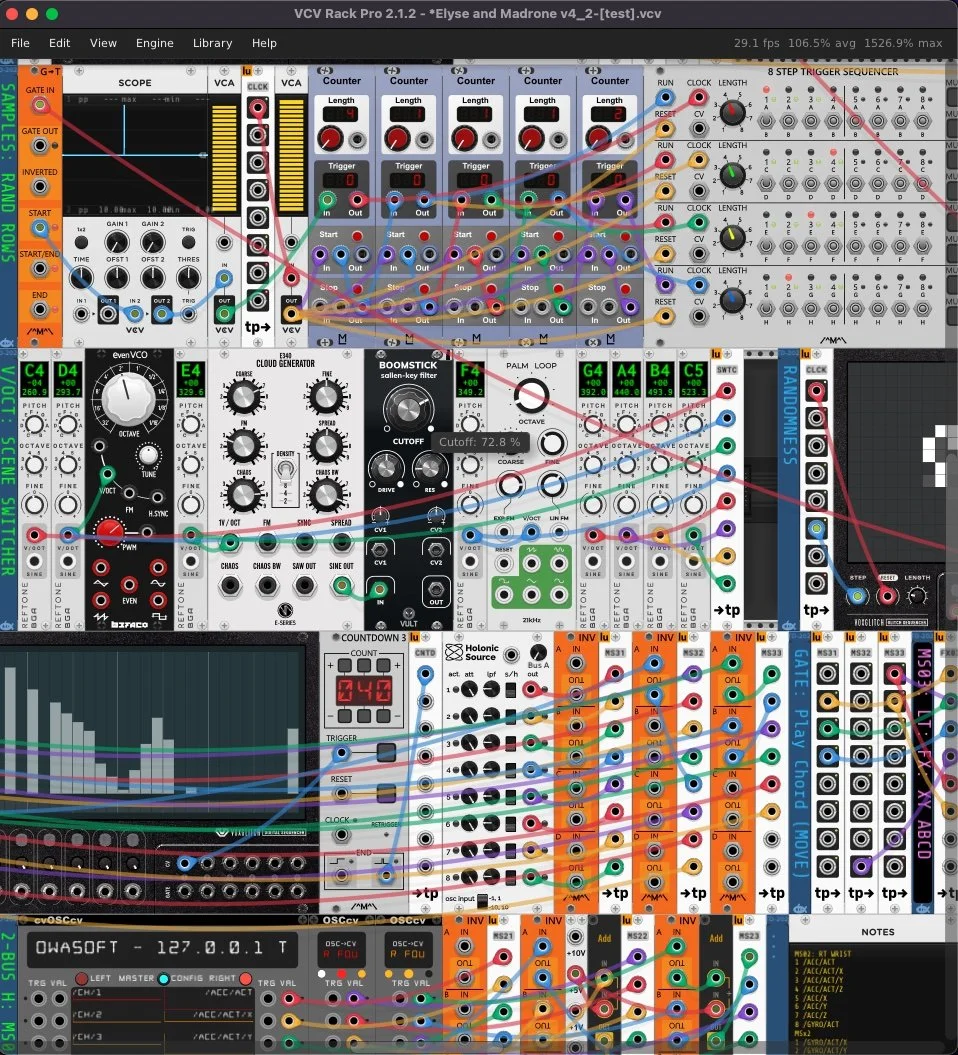

This video is an early proof of concept demonstrating the use of current head-tracking and facial-expression technology to trigger sounds, giving agency to the filmed individual and sonifying their performance.